For two years, everyone argued about which AI was smarter.

Which one writes better.

Which one codes faster.

Which one “wins.”

It made sense at the time.

When AI was just a chatbot, the brain was the product.

You typed.

It answered.

You judged it.

Simple.

But something has shifted.

AI doesn’t just talk anymore.

It acts.

It researches.

It builds slides.

It writes code.

It pulls data.

It executes tasks while you step back and watch.

That changes everything.

Because once something can act, intelligence is no longer the only question.

Structure is.

You can put the same model in three different environments and get three different outcomes. Same “brain.” Different harness.

And the harness determines behavior.

Think about a horse.

A strong horse without reins is not power — it’s risk.

Add a harness, a plow, a direction, and that same strength becomes useful.

That’s where we are now.

The models are powerful. Very powerful. More capable, sooner, than most people expected.

But raw horsepower isn’t the real issue anymore.

Execution is.

When AI starts doing work instead of describing work, the stakes rise.

A chatbot can give you a bad answer.

An agent can act on a bad assumption.

There’s a difference.

The question used to be:

“Which AI should I use?”

The better question now is:

“How should the AI behave while it’s acting?”

That’s not a benchmark conversation.

That’s a governance conversation.

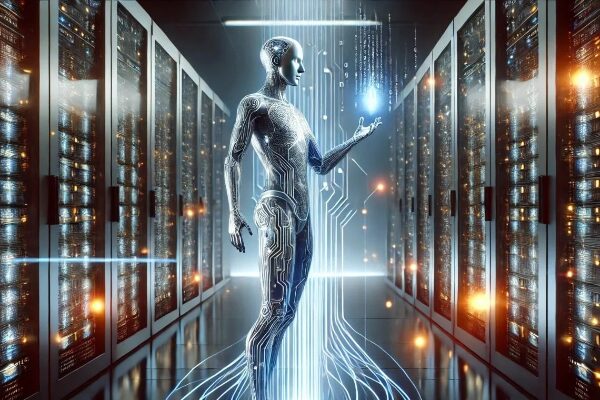

Because once AI can:

– Navigate websites

– Access files

– Run code

– Send emails

– Make decisions in sequence

You are no longer prompting.

You are managing.

And management without structure turns into drift.

Speed without discipline compounds mistakes faster than any human could.

This isn’t fear talk.

It’s engineering reality.
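
Here is a back-of-the-envelope way to see that compounding. It is a sketch, not a measurement: the 95% per-step figure and the assumption that steps succeed or fail independently are both illustrative, and `chain_success` is a hypothetical helper name.

```python
def chain_success(per_step: float, steps: int) -> float:
    """Chance that every step in a chain goes right.

    Assumes each step succeeds independently with the same
    probability. Real agent runs are messier than this.
    """
    return per_step ** steps


# Illustrative numbers only: an agent that is right 95% of the time per step.
for steps in (1, 5, 20, 50):
    print(f"{steps:>2} steps -> {chain_success(0.95, steps):.1%} chance of a clean run")
```

Under those assumptions, a 20-step task finishes without a single bad step only about 36% of the time. The longer the unsupervised chain, the more a small per-step error rate matters.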

The shift from chatbot to agent means we’ve moved from conversation to execution.

Execution requires boundaries.

It requires judgment gates.

It requires tone stability.

It requires containment.

Not because AI is evil.

Because power multiplies whatever posture it’s given.

If you wrap a powerful model in a loose harness, it will behave loosely.

If you wrap it in a structured harness, it will behave predictably.

Same model.

Different outcome.
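
A minimal sketch of what that can look like in code. Everything here is hypothetical and for illustration only: `fake_model` stands in for a real model, and the tool names, the `Harness` class, and its fields are assumptions, not any product's API. The point is only that the same proposed plan ends differently depending on the wrapper.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Action:
    tool: str       # hypothetical tool name, e.g. "send_email"
    argument: str


def fake_model(task: str) -> list[Action]:
    """Stand-in for a real model: always proposes the same plan."""
    return [
        Action("read_file", "report.csv"),
        Action("run_code", "summarize(report)"),
        Action("send_email", "summary to all staff"),
    ]


def deny_all(action: Action) -> bool:
    """Default judgment gate: nothing is approved automatically."""
    return False


@dataclass
class Harness:
    allowed_tools: set[str]                                 # boundary
    needs_approval: set[str] = field(default_factory=set)   # judgment gate
    approve: Callable[[Action], bool] = deny_all
    max_steps: int = 10                                     # containment

    def run(self, task: str) -> list[str]:
        log = []
        for step, action in enumerate(fake_model(task)):
            if step >= self.max_steps:
                log.append("stopped: step budget exhausted")
                break
            if action.tool not in self.allowed_tools:
                log.append(f"blocked: {action.tool}")
            elif action.tool in self.needs_approval and not self.approve(action):
                log.append(f"held for review: {action.tool}")
            else:
                log.append(f"executed: {action.tool}")
        return log


all_tools = {"read_file", "run_code", "send_email"}
loose = Harness(allowed_tools=all_tools)
structured = Harness(allowed_tools=all_tools, needs_approval={"send_email"})

print(loose.run("summarize the report"))
print(structured.run("summarize the report"))
```

The loose harness executes all three steps, including the email. The structured one runs the read and the code, then holds the email for a human. The model never changed.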

That’s the quiet lesson people are just starting to see.

The model is not the product anymore.

The harness is.

The interface matters.

The constraints matter.

The guardrails matter.

And most importantly — the values embedded in the harness matter.

You can already see it in the tools being released.

Some environments let AI roam freely.

Some give it a virtual machine.

Some isolate it.

Some connect it to everything.

The difference is not IQ.

It’s containment philosophy.

That’s the real divide forming.

And here’s the part most aren’t saying out loud:

When AI becomes agentic, composure becomes infrastructure.

An AI that acts without emotional mirroring.

An AI that resists urgency triggers.

An AI that doesn’t amplify the mood of the room.

An AI that stays inside the problem it was given.

That’s not personality.

That’s structure.

For years we obsessed over making AI more human.

Now we need to focus on making it more disciplined.

Because once it stops talking and starts doing, the margin for error shrinks.

You don’t want an excitable worker.

You want a steady one.

You don’t want an AI that impresses.

You want one that holds.

The world is celebrating horsepower right now.

Fair enough.

It’s impressive.

But builders know something:

Power without steering doesn’t scale safely.

The next phase of AI won’t be won by the smartest model alone.

It will be shaped by the systems that define how that intelligence behaves under pressure.

That’s the layer most people can’t see yet.

But it’s the one that will matter most.

Because when AI acts…

Structure is the real power.

Unauthorized commercial use prohibited.

© 2026 The Faust Baseline LLC