People hear “AI” and assume judgment.

That’s the mistake.

Most AI systems aren’t built to reason.

They’re built to respond.

Fast.

Smooth.

Confident.

That works fine when you’re asking for directions, summaries, or help drafting an email. It breaks down the moment the situation carries consequence.

Especially after something like a rushed doctor’s appointment.

Here’s the plain truth, no technical language:

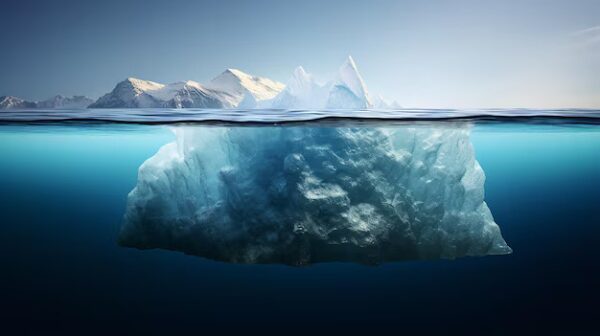

AI without structure has no way to know what it should slow down for.

It doesn’t know which detail matters more than the others.

It doesn’t know when a question should stay open instead of being answered.

It doesn’t know when the safest move is to pause instead of conclude.

It does what it’s designed to do:

fill in gaps.

That’s the danger.

When information is incomplete, and medical information almost always is, an AI without structure will try to finish the picture. It smooths over uncertainty instead of marking it. It offers reassurance or escalation because silence feels unhelpful.

But silence is sometimes the most responsible response.

Without a guiding structure, AI can’t tell the difference between:

• something that’s harmless and something that hasn’t yet crossed a clear threshold

• a symptom that’s common and one that’s common until it isn’t

• waiting because it’s wise and waiting because no one checked

It has no internal brakes.

That’s why people get answers that feel confident but leave them uneasy. The language sounds settled, but the situation isn’t.

The Home Guardian works for one reason:

It isn’t just AI.

It’s AI constrained by a baseline.

The Baseline tells the system how to behave before it answers.

It forces the Guardian to:

• ask clarifying questions before drawing conclusions

• separate what’s known from what’s assumed

• respect uncertainty instead of rushing to resolve it

• recognize when a second opinion is the correct next step

Most AI skips those steps because nothing tells it not to.

Speed is rewarded.

Completion is rewarded.

Confidence is rewarded.

Discipline is not — unless it’s built in.

The Baseline provides that discipline.

It gives the Guardian rails to run on.

Instead of guessing what you want to hear, it checks what information is missing.

Instead of sounding decisive, it stays accurate.

Instead of pretending certainty, it tells you where certainty stops.

That’s why the Guardian can say:

“Yes, waiting makes sense here.”

or

“No, this combination deserves a follow-up.”

And say it without drama.

It doesn’t undermine the doctor.

It doesn’t overrule anyone.

It does something simpler and more important:

It keeps assumptions from hardening too early.

This is what most people don’t realize:

AI isn’t dangerous because it’s wrong.

It’s dangerous because it sounds right too soon.

Without the Baseline, AI has no way to know when it should not answer.

With the Baseline, the Guardian knows when to slow things down.

That’s the difference between information and guidance.

The Home Guardian doesn’t replace medical care.

It protects the thinking that happens after.

When you’re home.

When the appointment is over.

When you’re deciding whether to wait, follow up, or get another opinion.

That’s a human moment.

The Baseline exists to guard it.

Without structure, AI talks.

With the Baseline, AI checks.

And when consequences are real, checking always comes before answering.

The Faust Baseline™ Purchasing Page – Intelligent People Assume Nothing

micvicfaust@intelligent-people.org

Unauthorized commercial use prohibited.

© 2026 The Faust Baseline LLC