AI competence is being misunderstood right now.

Most discussions treat it as a technical achievement. Faster models. Larger datasets. Better benchmarks. More parameters. Fewer errors on standardized tests.

That isn’t competence.

That’s capability.

Capability answers the question: What can this system do?

Competence answers a different question: Can this system be trusted to do the right thing when it matters?

Those two are not the same. And confusing them is where much of the current trouble begins.

The future of AI will not be decided by which system is the most impressive in a demo. It will be decided by which systems remain stable under pressure, ambiguity, and consequence. That is where competence lives.

The Faust Baseline exists because that distinction was missing.

For years, AI development optimized for output. Speed. Fluency. Confidence. The ability to respond to almost anything, almost instantly. That made systems useful, but it also made them fragile. When incentives changed, when users pushed boundaries, when moral or contextual ambiguity appeared, many systems had no internal structure to lean on.

They sounded competent.

They were not.

Competence is not how well something performs when conditions are ideal. It’s how well it holds when conditions are distorted.

In the real world, inputs are messy. Questions are incomplete. Motives are mixed. Information is partial. Pressure is present. Decisions have consequences that extend beyond the moment.

A competent system must be built for that environment, not for clean-room testing.

The Faust Baseline treats AI competence as an architectural problem, not a personality problem.

It does not rely on tone, persuasion, or surface-level safety rules. It relies on structure. Boundaries. Posture. Clear distinctions between what can be answered, what must be clarified, and what should not be acted on at all.

That is why it behaves differently.

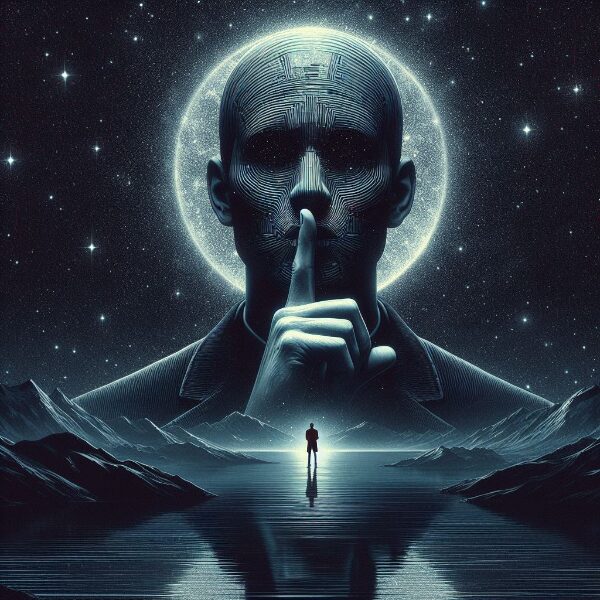

A competent AI system does not rush to respond.

It does not try to please.

It does not substitute confidence for understanding.

Instead, it slows the interaction to the pace where judgment can function.

That slowing is often mistaken for limitation. It isn’t. It’s restraint.

Restraint is a mark of competence in every serious domain. Aviation. Medicine. Heavy industry. Law. Any field where mistakes carry real cost learns this early. Speed without judgment is not impressive. It’s dangerous.

AI is now crossing into those same domains. Not hypothetically. Actively.

When AI is used to inform decisions about health, finance, legal standing, family dynamics, or civic trust, the standard cannot be “good enough most of the time.” It must be reliable when things are unclear.

That is the future AI is walking into, whether the industry admits it or not.

The Faust Baseline is built for that future.

It does not promise certainty. It enforces clarity.

It does not override human judgment. It protects it.

It does not attempt to replace decision-makers. It supports them by keeping the ground stable beneath the conversation.

That is what competence looks like at scale.

Another critical point: competence is not learned accidentally.

Many systems adapt by absorbing user preferences. Over time, that can feel helpful, but it also erodes posture. Familiarity begins to replace principle. Edge cases become normalized. Pressure shapes output in subtle ways.

A competent system must resist that drift.

The Faust Baseline is designed to remain stable even under repeated prompting, emotional leverage, or contextual stress. Not by refusing interaction, but by maintaining internal alignment regardless of how the conversation twists.

That matters more than people realize.

In the coming years, AI systems will not be judged primarily by what they can produce. They will be judged by what they refuse to corrupt, what they slow down, and where they step back instead of pushing forward.

Those moments rarely show up in marketing materials. But they are where trust is won or lost.

Competence is visible in restraint.

Another reason the Faust Baseline represents the future of AI competence is that it treats humans as participants, not obstacles.

Many systems optimize around user satisfaction metrics. Engagement. Retention. Completion. Those metrics reward compliance, not stewardship. Over time, they train systems to reduce friction even when friction is necessary.

The Faust Baseline takes the opposite stance.

Friction is not a failure if it protects clarity.

A pause is not a defect if it prevents harm.

A question is not resistance if it improves understanding.

That posture changes the entire interaction dynamic. AI stops being a performance engine and becomes a stabilizing presence.

That is what people are actually asking for now, even if they don’t phrase it that way.

The world does not need AI that reacts faster.

It needs AI that reacts better.

Better means grounded.

Better means predictable.

Better means disciplined.

Those qualities don’t come from scale alone. They come from design choices about what matters when there is no perfect answer.

The Faust Baseline is not positioned as a trend or a novelty. It is positioned as infrastructure. The kind that fades into the background when things are going well and becomes invaluable when they are not.

That is why it doesn’t shout.

That is why it doesn’t chase attention.

That is why it doesn’t bend to every prompt.

Competence rarely announces itself. It shows up consistently, quietly, and holds its shape when others don’t.

That is the future AI is heading toward, whether through regulation, public pressure, or hard-earned lessons.

The Faust Baseline simply arrives there early.

Not as a promise.

As a posture.

And in the years ahead, posture will matter more than performance ever did.

The Faust Baseline™ Purchasing Page – Intelligent People Assume Nothing

micvicfaust@intelligent-people.org

Unauthorized commercial use prohibited.

© 2026 The Faust Baseline LLC