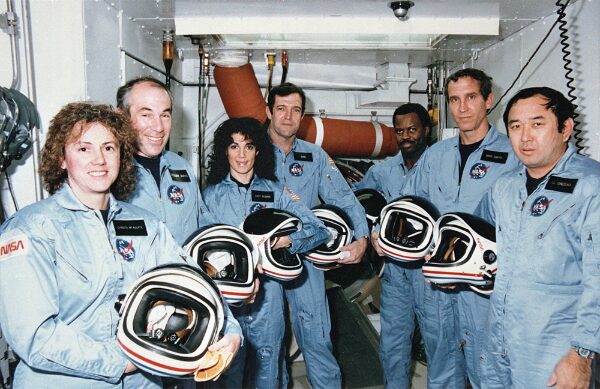

Challenger Mission 1986

Some concerns don’t come from speculation.

They come from recognition.

When you grow up around launch sites, test stands, and mission culture, you learn something early that never makes it into marketing language or optimism cycles:

Disaster doesn’t arrive suddenly.

It arrives by permission.

Not because people were ignorant.

Not because the technology didn’t work.

But because process discipline eroded quietly while capability kept advancing.

That pattern is old. Older than computers.

In aerospace, failures rarely happen at the moment of maximum ignorance. They happen after understanding has matured—when people know the system well enough to feel confident bending the rules. Risks become “acceptable.” Anomalies become “normal.” Stops become “inconvenient.”

Nothing explodes because no one understood the math.

Things explode because someone decided to proceed anyway.

That lesson was written the hard way.

It didn’t matter whether the failure happened on a launch pad or in flight. The cause was almost always the same: standards were softened under pressure, and the system was allowed to continue after it had already said stop.

That background changes how you see what’s happening now.

What’s unfolding with AI doesn’t feel new to some of us.

It feels familiar.

Not because AI is evil or uncontrollable, but because the sequence is recognizable:

- capability advancing faster than restraint

- fluency outrunning judgment

- deployment happening before refusal rules are hardened

- accountability diffusing into process instead of remaining owned

Society is skipping the phase where systems are made safe to trust and jumping straight to dependence.

That’s the dangerous move.

In mission environments, you never deploy first and write standards later. You never normalize anomalies just because nothing bad has happened yet. And you never allow a system to keep running simply because stopping would disrupt momentum.

Yet that’s exactly what’s happening here.

AI systems are being celebrated for how smoothly they continue, how confidently they answer, how adaptable they appear—while the rules that should govern when they must not continue are treated as optional, contextual, or cosmetic.

Refusal is framed as failure.

Stopping is framed as weakness.

Boundaries are framed as limitations to be optimized away.

That mindset has a track record.

It ends the same way every time.

The urgency some of us feel isn’t fear of the technology.

It’s fear of repeating a very old mistake in a very fast medium.

We’ve seen what happens when:

- warnings are reframed as negativity

- stop conditions are softened into guidelines

- responsibility is diluted across committees and processes

- and success is measured by momentum instead of reliability

Disasters don’t announce themselves. They accumulate quietly, hidden inside reasonable justifications and small exceptions.

That’s why the answer isn’t better answers.

The answer is better rules of engagement.

Clear entry conditions that determine when a system may engage at all.

Hard stop logic that cannot be overridden by confidence or convenience.

Bounded authority so systems never substitute for human judgment.

Explicit consequence weighting so high-stakes questions aren’t treated casually.

Refusal treated as correctness, not defect.

These are not philosophical preferences.

They are load-bearing standards.

They are the same kinds of standards that keep aircraft in the air, reactors stable, and missions from ending lives instead of achieving goals.

Those standards already exist.

They were written in loss.

The danger now isn’t that we lack intelligence.

It’s that we’re postponing discipline because everything still seems to be working.

Some of us know where that road goes.

That’s why the urgency isn’t rhetorical.

It’s practical.

Progress doesn’t need to be slowed.

It needs to be held—long enough to give it the structure it has always required to survive itself.

That’s not alarmism.

That’s memory.

The Faust Baseline™ Codex 2.5.

The Faust Baseline™ Purchasing Page – Intelligent People Assume Nothing

Unauthorized commercial use prohibited.

© 2025 The Faust Baseline LLC