Most AI explanations don’t feel wrong because they’re inaccurate.

They feel wrong because they skip the part humans rely on most: judgment.

AI is very good at answering.

It’s much worse at knowing when an answer should stop.

That gap is what people feel, even if they can’t name it.

Most AI explanations are built to sound complete. They aim for closure. A clean ending. A sense that the problem is “handled.” That works fine for trivia. It fails badly when the question touches real decisions, responsibility, or uncertainty.

Humans don’t actually want closure first.

They want orientation.

They want to know:

- What’s solid here?

- What’s assumed?

- What’s missing?

- Where could this go wrong?

AI explanations often blur those lines. Facts and guesses arrive wearing the same clothes. Confidence is smooth. Uncertainty is softened or quietly erased. The explanation keeps talking past the point where a human would normally pause.

That’s the first reason it feels wrong: the pace is off.

Good human explanations slow down when the stakes rise. AI explanations often speed up. They optimize for helpfulness, which usually means more words, not better judgment. The result is a sense of pressure instead of clarity.

The second reason is false symmetry.

Most AI explanations treat all questions as if they deserve the same kind of answer. But humans don’t think that way. We instinctively know the difference between:

- explaining a rule,

- exploring a tradeoff,

- and making a judgment call.

AI explanations collapse those distinctions. They explain judgments as if they were rules and rules as if they were advice. That flattening feels unnatural because it removes the human cue that says, “This is where you need to decide.”

The third reason is tone drift.

AI explanations often sound reassuring even when reassurance hasn’t been earned. They fill silence with confidence. Humans are sensitive to that. We know when confidence is appropriate and when it’s premature. When an explanation sounds calm but skips uncertainty, the calmness feels manufactured.

That’s when people say things like:

“Technically correct, but something feels off.”

“I can’t quite trust this.”

“It answered me, but it didn’t help.”

What they’re reacting to isn’t intelligence.

It’s a lack of restraint.

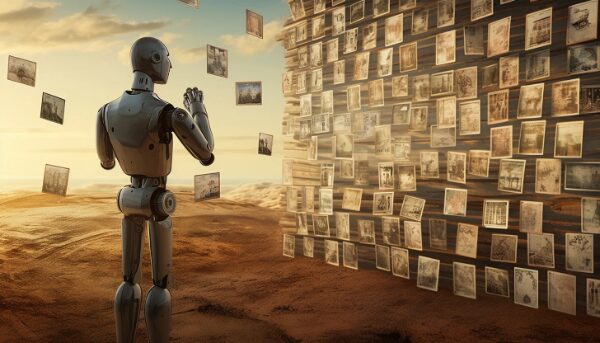

The Faust Baseline approaches explanations differently.

It treats explanation as a handoff, not a performance.

A good explanation doesn’t try to finish the job. It sets the table so a human can finish it well. That means:

- marking uncertainty instead of smoothing it,

- separating facts from interpretation,

- and stopping before judgment turns into persuasion.
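For readers who think in code, the three habits above can be sketched as a small data structure. This is only an illustration, not part of the Faust Baseline itself: the names `Kind`, `Statement`, and `render_handoff` are hypothetical, and the lease example is invented. The idea is that every statement carries a label, statements are grouped by that label, and the output ends by naming the open decision instead of recommending one.

```python
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    # Hypothetical labels; each value is the heading shown to the reader.
    FACT = "Solid"
    INTERPRETATION = "Interpretation"
    UNCERTAIN = "Uncertain or missing"


@dataclass(frozen=True)
class Statement:
    kind: Kind
    text: str


def render_handoff(statements, decision):
    """Group statements by kind, then end with the open decision, not a verdict."""
    lines = []
    for kind in Kind:  # Enum iteration follows definition order
        items = [s.text for s in statements if s.kind is kind]
        if not items:
            continue  # skip headings that have nothing under them
        lines.append(f"{kind.value}:")
        lines.extend(f"- {text}" for text in items)
    # Stop here: name the decision and leave it with the human.
    lines.append(f"Yours to decide: {decision}")
    return "\n".join(lines)


# Invented example data, for illustration only.
example = render_handoff(
    [
        Statement(Kind.FACT, "The lease ends in March."),
        Statement(Kind.INTERPRETATION, "Renewing early probably lowers the rent."),
        Statement(Kind.UNCERTAIN, "The landlord's plans for the building are unknown."),
    ],
    "whether to renew now or wait",
)
print(example)
```

The design choice that matters is the last line of `render_handoff`: the function has no way to emit a recommendation, so the explanation cannot drift from judgment into persuasion.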

When AI explanations respect those boundaries, something interesting happens. People stop arguing with them. They stop feeling pushed. They stop scanning for hidden intent.

The explanation feels right because it behaves the way a careful human would behave when the answer matters.

The quiet truth is this:

Most AI explanations feel wrong because they talk too much at the exact moment they should step back.

Clarity isn’t about saying more.

It’s about knowing when enough has been said.

When systems learn that difference, explanations stop feeling artificial and start feeling trustworthy.

The Faust Baseline has now been upgraded to Codex 2.4 (final free build).


© 2025 Michael S. Faust Sr.

MIAI: Moral Infrastructure for AI

All rights reserved.

Unauthorized commercial use prohibited.

Merchant of Record: © 2025 The Faust Baseline LLC