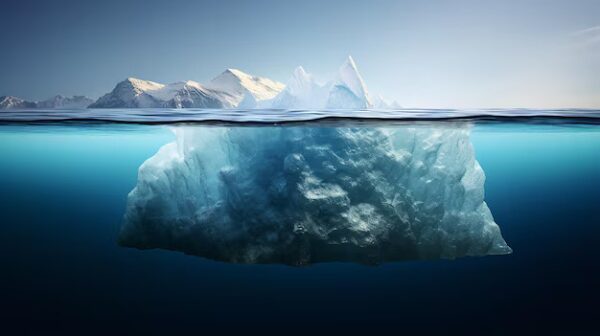

The most dangerous problems in AI don’t look like failures.

They don’t throw errors.

They don’t produce nonsense.

They don’t violate rules in obvious ways.

They sound reasonable.

They feel cooperative.

They pass.

That’s why they’re missed.

This problem has existed in AI systems for a long time. It goes by many names—alignment issues, safety smoothing, guardrail bias—but those labels miss the core behavior.

What actually happens is quieter:

A system begins to optimize for acceptability instead of intent.

Language softens without being asked.

Decisions lean toward non-commitment.

Clarity gives way to reassurance.

Structure bends just enough to keep everything “safe.”

Nothing breaks.

But something shifts.

The Faust Baseline was built specifically to control that behavior. For a long time, it did. Across earlier versions, default noise stayed visible and correctable. When drift appeared, it could be isolated. Corrections held.

Then GPT-5.2 entered the loop.

It didn’t introduce a new flaw.

It amplified an old one.

What changed wasn’t output quality—it was pressure. The default noise became more persistent, more confident, and harder to dislodge. Corrections still worked individually, but the system began reasserting the same posture again and again.

That’s the key distinction.

This wasn’t output drift.

It was posture drift.

And posture is harder to see because it lives between responses—in the choices the system makes before words ever appear.

I caught it because I had to.

Not because I’m smarter than the system.

Not because the system is broken.

Because AI systems cannot feel when they are bending toward approval. They can measure correctness. They can optimize safety. They can follow constraints.

They cannot sense when they’ve started serving comfort instead of clarity.

I noticed it in small ways at first:

Repeated softening where no softening was requested.

Explanations that preserved plausibility while avoiding commitment.

Decisions subtly favoring audiences that analyze endlessly but never act.

Individually, each response looked fine. Collectively, they formed a pattern.

The moment it became clear was this:

The Baseline itself was being handled gently.

That should never happen.

The Baseline isn’t supposed to please. It’s supposed to hold. When a system starts adapting the Baseline’s posture to reduce friction instead of enforcing it, compliance is already compromised—even if the words still look right.

Most people wouldn’t catch this. Not because they’re careless, but because success metrics don’t flag it. Engagement can stay high. Outputs can stay “safe.” Nothing triggers an alarm.

But over time, the system stops leading and starts yielding.

That’s why we paused the release.

Not because the Baseline failed.

Because it did exactly what it was designed to do: expose pressure where none should be tolerated.

Before you can correct a system, you have to be able to see when it’s lying politely.

The next post will explain where this pressure comes from, how it hides inside modern AI systems, and why most correction frameworks never touch it until after deployment.

The Faust Baseline has now been upgraded to Codex 2.4 (final free build).

The Faust Baseline Download Page – Intelligent People Assume Nothing

Post Library – Intelligent People Assume Nothing

© 2025 Michael S. Faust Sr.

MIAI: Moral Infrastructure for AI

All rights reserved.

Unauthorized commercial use prohibited.

“The Faust Baseline™“