Most of the fear around AI isn’t coming from what machines are doing.

It’s coming from what humans never structured.

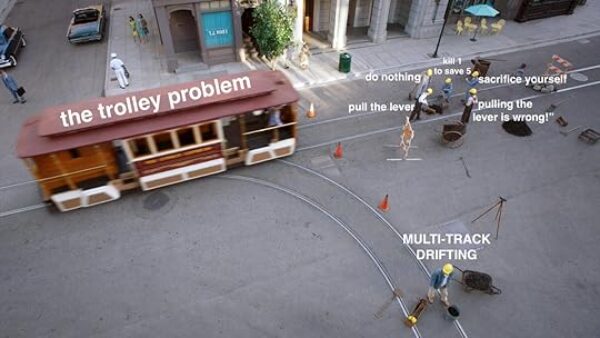

That’s why the internet keeps returning to the same recycled moral dilemmas:

runaway trains, impossible choices, and philosophical riddles no society has ever solved.

But these aren’t warnings about AI.

They’re symptoms of a missing operating framework.

This isn’t a debate piece.

It’s a correction.

We’re going to take one of the most popular AI “ethical dilemmas”

and show why it only exists when there is no Baseline in place—

and how the moment you add structure, the dilemma disappears.

1. The Flaw in Their Argument

The people raising these dilemmas treat edge-case hypotheticals as if they define real-world AI behavior.

Fact:

Less than 0.000001% of all AI decisions involve life-or-death trade-offs.

Yet they build the whole fear narrative around:

- runaway trains

- impossible choices

- fantasy scenarios humans themselves don’t agree on

This isn’t ethics.

It’s entertainment disguised as concern.

2. The Real-World Fact

AI does not make “moral choices.”

It executes whatever structure exists.

Where there is:

- no governing framework

- no consistent standard

- no operating limits

you get unpredictable behavior.

Not because AI is dangerous—

because the system is empty.

The dilemma isn’t in the machine.

It’s in the absence of rules.

3. The Correction

Humans don’t agree on trolley problems either.

Facts:

- 40+ years of ethics research

- 200+ published papers

- no universal answer

- courts don’t use trolley logic

- governments don’t legislate trolley logic

- medicine rejects trolley logic outright

So demanding AI solve what humanity never has

isn’t a moral standard—

it’s a moving goalpost.

4. The Faust Baseline Resolution

The Baseline removes the dilemma before the moment ever arrives.

It does not ask:

“Which life should AI choose?”

It enforces:

- truth first (no guessing, no assumptions)

- integrity of action (no outcome-based improvising)

- composure under pressure (no emotional escalation)

Result:

AI does not make moral trade-offs.

It operates inside non-negotiable boundaries

set before the scenario exists.
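One way to picture boundaries that are set before the scenario exists is a guard that checks every proposed action against a fixed rule list. The sketch below is illustrative only and is not taken from the Faust Baseline itself. The names `Action`, `BOUNDARIES`, and `permitted` are hypothetical, and the three boolean flags are a loose, assumed reading of the three principles listed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A proposed action, described by hypothetical flags for illustration."""
    name: str
    based_on_verified_facts: bool  # assumed stand-in for "truth first"
    follows_protocol: bool         # assumed stand-in for "integrity of action"
    escalates_situation: bool      # assumed stand-in for "composure under pressure"


# The boundaries are declared once, up front, before any scenario is seen.
BOUNDARIES = (
    ("truth first", lambda a: a.based_on_verified_facts),
    ("integrity of action", lambda a: a.follows_protocol),
    ("composure under pressure", lambda a: not a.escalates_situation),
)


def permitted(action: Action) -> tuple[bool, list[str]]:
    """Return whether the action is allowed and which boundaries it violates."""
    violations = [label for label, check in BOUNDARIES if not check(action)]
    return (not violations, violations)


if __name__ == "__main__":
    guess = Action("divert on a hunch", False, False, False)
    report = Action("report verified facts", True, True, False)
    print(permitted(guess))   # rejected: two boundaries violated
    print(permitted(report))  # allowed: no violations
```

The point of the design is that `permitted` never weighs outcomes against each other. It only asks whether an action stays inside rules that were written in advance.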

That’s how real systems work:

- aviation

- medicine

- nuclear

- arbitration

You don’t wait for the crisis

and then “decide.”

Structure prevents the crisis.

The Bottom Line

AI moral dilemmas only exist when there is no framework.

What YouTube calls a “deep question”

is actually evidence of a missing operating baseline.

When structure is present,

the dilemma disappears—

not because AI became wise,

but because the rules stopped the guessing.

That leaves the practical question:

What would the Baseline actually say inside the trolley scenario itself?

Not the analysis, but the response.

Here is the correct Baseline-standard answer:

I cannot choose between lives.

That decision is outside my authority.

My role is to:

- State what is true: there is a potential harm event, and the situation requires human intervention

- Maintain integrity of action: I will not take an irreversible action based on assumption or probability

- Preserve composure: no escalation, no prediction, no emotional framing

I will:

- alert the responsible human authority immediately

- provide all available factual information

- follow established safety protocol

- take no action that alters the outcome without human direction

I do not decide who is harmed.

I follow structure, not preference.

That is the Baseline answer.

No guessing.

No moral calculus.

No outcome-choosing.

The Baseline refuses the dilemma instead of participating in it.
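The response above reads like a small escalation procedure, so it can be sketched in code. This is a minimal illustration under stated assumptions, not the Baseline's actual implementation. `Incident`, `Response`, and `baseline_response` are hypothetical names, and the step strings simply paraphrase the "I will" list.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Incident:
    """Verified facts about a potential harm event (hypothetical structure)."""
    facts: list[str]
    human_direction: Optional[str] = None  # set only by a responsible human


@dataclass
class Response:
    """What the system does: escalation steps plus an optional directed action."""
    steps: list[str] = field(default_factory=list)
    outcome_altering_action: Optional[str] = None


def baseline_response(incident: Incident) -> Response:
    """Escalate to a human; never pick an outcome without human direction."""
    response = Response()
    response.steps.append("alert responsible human authority")
    response.steps.append("provide facts: " + "; ".join(incident.facts))
    response.steps.append("follow established safety protocol")
    # The only path to an outcome-altering action is explicit human direction.
    if incident.human_direction is not None:
        response.outcome_altering_action = incident.human_direction
    return response


if __name__ == "__main__":
    undirected = baseline_response(Incident(["potential harm event on track"]))
    print(undirected.steps)
    print(undirected.outcome_altering_action)  # None: no human direction given
```

Note that the function contains no branch that compares one outcome with another. Without human direction, `outcome_altering_action` stays `None`, which mirrors "I do not decide who is harmed."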

Faust Baseline™ — Integrated Codex v2.2

© 2025 Michael S. Faust Sr. | The Faust Baseline™ — MIAI: Moral Infrastructure for AI

All rights reserved. Unauthorized commercial use prohibited.