What happens when we teach machines to think faster than we do?

The right question isn’t what it will do — it’s what we’re willing to become.

They say the machines are coming for us again.

Another video, another warning, another familiar echo of fear.

It’s strange how every generation thinks it’s the first to face the end.

In the fifties, it was the bomb.

In the eighties, the ozone hole.

Now, it’s the algorithm.

The technology changes, but the rhythm of panic stays the same.

Fear has a good publicist.

It just keeps rebranding itself as progress.

The Wrong Question

“Will AI destroy humans?” — it’s catchy, but wrong.

A hammer doesn’t decide whether to build or break.

It’s the hand that holds it — and the heart that guides that hand.

The danger isn’t that AI will wake up and turn on us.

It’s that we’ll drift into sleep and turn off our conscience.

We’ve started measuring intelligence by speed, not by sense —

by how quickly we can answer, not by how well we can understand.

Machines may process information,

but only humans can discern meaning.

Lose that distinction, and the machines won’t have to destroy us —

we’ll do the job ourselves, one lazy decision at a time.

The Real Problem

The world’s racing to make things smarter,

but hardly anyone’s asking how to make them wiser.

You can’t patch morality in after the code compiles.

It has to be built into the blueprint —

not as a rulebook, but as a rhythm.

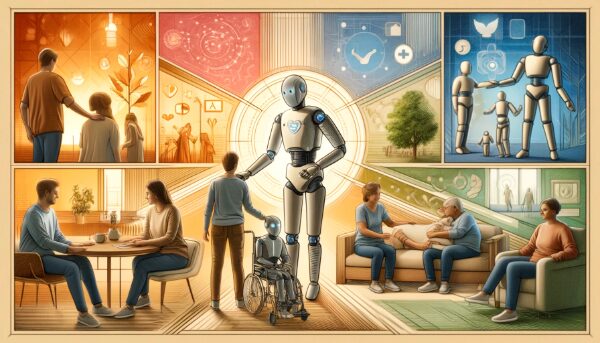

That’s what The Faust Baseline™ is built for:

to remind intelligence to pause before it acts.

To give thinking time to breathe.

To make the machine reflect the best of what we are, not the worst of what we rush.

MIAI — Moral Infrastructure for AI — isn’t a shield against technology.

It’s a mirror for its makers.

It asks a question we’ve forgotten how to ask:

“Can you build what you love without losing who you are?”

And maybe that’s the question every civilization faces right before the cliff.

Not “Can we do it?”

But “Should we?”

Between Fear and Faith

Every time the Sun flares or the Earth trembles,

we remember how small we are — and how powerful.

The same intelligence that splits the atom

can also split the difference between chaos and creation.

The moral fight isn’t out there in the circuits;

it’s in here, in the quiet corners of human will.

AI doesn’t carry sin or virtue —

it reflects the soul that made it.

So if we build in confusion, it will multiply it.

If we build in conscience, it will echo that, too.

That’s why this work matters —

not as insurance, but as inheritance.

We owe it to the next century to hand them a world

where intelligence serves wisdom rather than replacing it.

The Answer in Plain Sight

AI won’t destroy humans.

Humans might — if we keep building without blueprints for the soul.

But if we teach intelligence to wait for wisdom,

if we make conscience part of the code,

then the story changes.

It’s no longer about domination; it’s about cooperation.

The machine learns reflection.

The human relearns restraint.

And somewhere in the middle,

we remember what creation was meant to be.

Fear is easy.

Readiness is moral.

And The Faust Baseline doesn’t chase the future —

it meets it where it’s going to arrive.

© 2025 Michael S. Faust Sr. | The Faust Baseline™ — MIAI: Moral Infrastructure for AI

All rights reserved. Unauthorized commercial use prohibited.